Debug-gym

Large Language Models (LLMs) are widely used for coding, but they primarily approach coding tasks in a “static” (i.e., single-turn) manner, reflecting the type of data they have been trained on. We propose that LLMs could perform better by interactively exploring codebases. To support this, we introduce debug-gym, a lightweight textual environment with tools like pdb that enables LLM agents to debug and generate code more effectively. This approach can also extend to other tasks that benefit from active information gathering.

AI coding tools are rapidly evolving, but their biggest limitation remains debugging, an essential and time-consuming part of software development. Debug-gym, a new open-source research environment from Microsoft, is designed to change that. It helps AI agents learn to debug code the way human developers do: interactively, iteratively, and with the right tools.

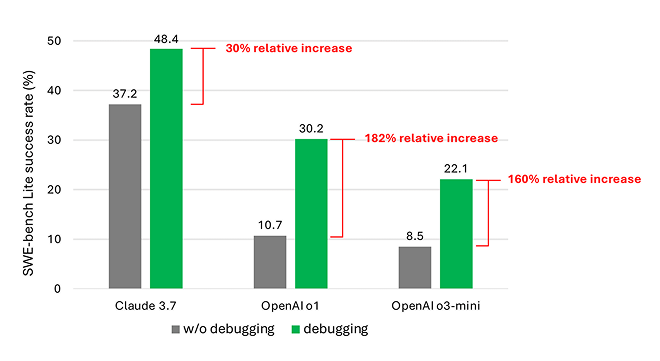

Unlike current AI systems that passively suggest fixes based on error messages, debug-gym gives agents access to tools. For example, with tools such as Python’s pdb debugger, AI coding agents can set breakpoints, inspect variable values, navigate large codebases, and even write custom tests.

Debug-gym includes three coding benchmarks to measure LLM-based agents’ performance in interactive debugging: Aider for simple function-level code generation, Mini-nightmare for short, hand-crafted buggy code examples, and SWE-bench for real-world coding problems that require a comprehensive understanding of a large codebase and a solution in the form of a GitHub pull request. Integration with swe-smith is coming soon.

Early experiments show promising improvements in agents’ performance when they are given access to tools, especially on complex, real-world coding tasks. Microsoft invites researchers and developers to explore this next frontier in AI-assisted programming. Try debug-gym and build smarter, more useful AI tools that don’t just generate code, but also improve it.
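To make the pdb-based workflow more concrete, here is a minimal sketch that uses only Python's standard library, not debug-gym's own API. It drives `pdb.Pdb` with a scripted list of commands, roughly the way an agent might issue tool calls, and captures the debugger's text output as the observation the agent would read back. The function `buggy_mean` and the helper `run_pdb_session` are hypothetical illustrations, not part of debug-gym.

```python
import io
import pdb


def buggy_mean(values):
    """Hypothetical buggy function: should return the arithmetic mean."""
    total = 0
    for v in values:
        total += v
    return total / (len(values) - 1)  # bug: divides by n - 1 instead of n


def run_pdb_session(commands, func, *args):
    """Run func(*args) under pdb, feeding it a scripted list of commands.

    Hypothetical helper: returns the function's result and everything pdb
    printed, which an agent could consume as its observation.
    """
    stdin = io.StringIO("\n".join(commands) + "\n")
    stdout = io.StringIO()
    # Supplying stdout makes pdb read commands from `stdin` instead of input();
    # readrc=False ignores any local .pdbrc, nosigint=True leaves SIGINT alone.
    debugger = pdb.Pdb(stdin=stdin, stdout=stdout, readrc=False, nosigint=True)
    # runcall stops on the first line of func, then consumes the commands.
    result = debugger.runcall(func, *args)
    return result, stdout.getvalue()


# Agent-style "tool calls": run until the function returns ("r"),
# inspect two local values, then let execution continue ("c").
result, output = run_pdb_session(
    ["r", "p total", "p len(values)", "c"], buggy_mean, [2, 4, 6]
)
print(output)
print("result:", result)  # 6.0, although the mean of [2, 4, 6] is 4.0
```

Inspecting `total` (12) and `len(values)` (3) at the return point is enough to see that the function divides by 2 instead of 3. Setting a breakpoint with `b <lineno>` followed by `c` would work the same way; the `r` command is used here only so the sketch does not depend on source line numbers.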