Author: Evan

-

MatterSim

MatterSim is a deep learning model for accurate, efficient materials simulation and property prediction across a wide range of elements, temperatures, and pressures. It enables in silico materials design—supporting metals, oxides, sulfides, halides, and more across crystalline, amorphous, and liquid states.

-

TamGen

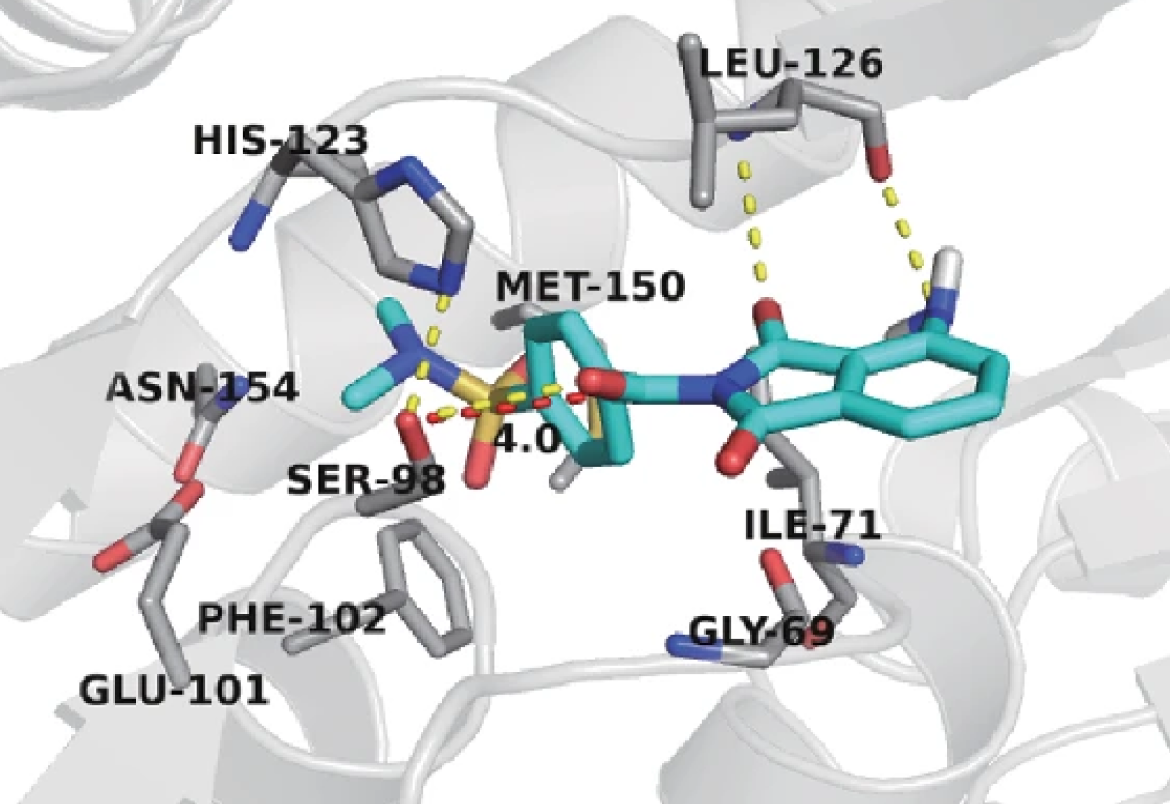

TamGen is a transformer-based chemical language model for target-specific drug design. It can generate novel compounds or optimize existing ones by creating target-aware fragments—accelerating discovery, reducing costs, and highlighting the promise of generative AI in advancing new treatments.

-

Aurora

Aurora is a large-scale foundation model for atmospheric forecasting. Trained on over a million hours of weather and climate simulations, it predicts variables like wind, temperature, and air quality with high resolution and speed—helping anticipate and mitigate extreme weather impacts.

-

OmniParser V2

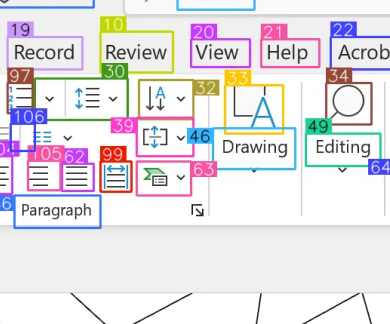

OmniParser V2 is a pioneering screen-parsing module that turns user interfaces into actionable elements through visual input. By recognizing and mapping UI components, it enables more robust automation, helping agents interact with apps and workflows more effectively across platforms.

-

Magentic-One

Magentic-One is a generalist multi-agent system for complex web and file-based tasks. With an Orchestrator coordinating specialized agents, it automates multi-step workflows across environments—using task and progress ledgers to plan, adapt, and optimize actions for efficient problem-solving.

-

ExACT

ExACT is an approach for teaching AI agents to explore more effectively, enabling them to intelligently navigate their environments, gather valuable information, evaluate options, and identify optimal decision-making and planning strategies.

-

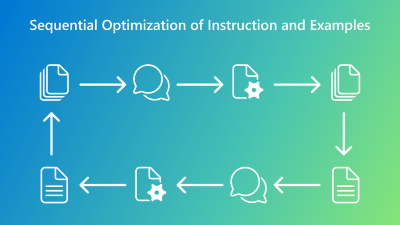

PromptWizard

PromptWizard is a self-evolving framework that automates prompt optimization. By iteratively refining instructions and examples with model feedback, it delivers task-aware, high-quality prompts in minutes, combining expert reasoning, joint optimization, and adaptability across diverse use cases.

-

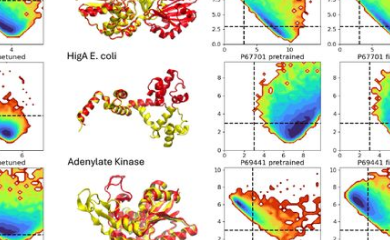

BioEmu-1

BioEmu-1 is a deep learning model that generates thousands of protein structures per hour on a single GPU—vastly more efficient than classical simulations. By modeling structural ensembles, it opens new insights into how proteins function and accelerates drug discovery and biomedical research.

-

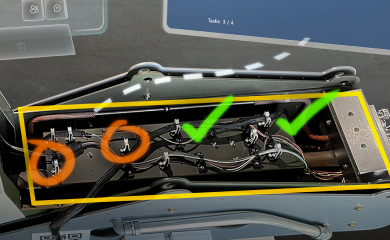

Magma

Magma is a multimodal foundation model that perceives text and visuals to generate actions in both digital and physical environments. From navigating UIs to manipulating real-world tools, it advances the vision of general-purpose AI assistants that integrate seamlessly across contexts.

-

Microsoft Phi-4

Phi-4 expands Microsoft’s small language model family with two new releases: Phi-4-multimodal, integrating speech, vision, and text for richer interactions, and Phi-4-mini, a compact model excelling at reasoning, coding, and long-context tasks. Together, they enable efficient, versatile AI across apps and devices.

-

Muse

Muse is a World and Human Action Model (WHAM) developed by Microsoft Research with Ninja Theory. Trained on the game Bleeding Edge, it can generate visuals, controller actions, or both—showcasing how generative AI could accelerate creativity and interactive design in gaming.