
-

MatterGen

MatterGen is a diffusion-based generative model for inorganic materials design. It can propose stable, novel crystal structures and be guided by target properties like bulk modulus, band gap, or magnetic density, accelerating materials discovery.

-

CalcLM

CalcLM is a prototype from Azure AI Foundry Labs that experiments with bringing agentic AI into the grid interface of Excel. It enables users to express agent steps as formulas, chain tasks across cells, and quickly explore how simple agent workflows might flow in a familiar surface.
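As a rough illustration of chaining tasks across cells, the sketch below models a sheet as a dictionary in which a cell holds either a plain value or a formula that reads other cells. This is not CalcLM's implementation or formula syntax: `evaluate`, the `sheet` layout, and the stand-in "agent" lambdas are all hypothetical, and a real agent step would call a model rather than format a string.

```python
def evaluate(cell, sheet, cache=None):
    """Return the value of `cell`, computing formula cells on demand."""
    cache = {} if cache is None else cache
    if cell not in cache:
        value = sheet[cell]
        if callable(value):
            # A formula receives a getter so it can read the cells it depends on.
            value = value(lambda ref: evaluate(ref, sheet, cache))
        cache[cell] = value
    return cache[cell]


# Hypothetical sheet: B1 and C1 stand in for agent steps chained on A1.
sheet = {
    "A1": "solar panels",
    "B1": lambda get: f"summary of {get('A1')}",
    "C1": lambda get: get("B1").upper(),
}

result = evaluate("C1", sheet)
print(result)
```

The cache keeps each step from running more than once per evaluation, which matters when a step is an expensive model call. The sketch does not detect circular references.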

-

Debug-gym

Debug-gym is an open-source research environment for teaching AI coding agents to debug more like humans—interactively and iteratively. With tools like Python’s pdb, agents can set breakpoints, inspect code, and run tests, enabling smarter, more reliable coding workflows.

-

Trellis

Trellis is a research system for generating editable 3D assets from simple text or image prompts. Using a novel latent representation, it produces meshes, radiance fields, and 3D Gaussians with rich texture and structure—accelerating workflows in gaming, AR/VR, digital twins, and industrial design.

-

NextCoder

NextCoder is a research model series designed to improve code editing. Using a novel synthetic data pipeline and the SeleKT adaptation algorithm, it teaches language models to handle diverse edit requirements while retaining strong code generation skills, outperforming peers across multiple benchmarks.

-

MSR-ACC

MSR-ACC introduces Skala, a deep-learning-based exchange-correlation functional that achieves experimental accuracy in density functional theory. Trained on the largest high-accuracy dataset of molecular energies, Skala advances computational chemistry with reliable, scalable predictions for molecules and materials.

-

TypeAgent

TypeAgent is research sample code exploring how to build a single personal agent with natural language interfaces. By distilling language models into logical structures for actions, memory, and plans, it enables safer, faster, and lower-cost ways to map user requests into meaningful applications.

-

Magentic-UI

Magentic-UI is a research platform for advancing human-in-the-loop AI experiences. It explores co-planning, co-tasking, and task learning. Features like action guards and safe sandboxes enable more trustworthy human-AI collaboration.

-

Project Amelie

Project Amelie is the first Foundry autonomous agent designed to perform machine learning engineering tasks. With natural language prompts, it generates validated ML pipelines, evaluation metrics, trained models, and reproducible code—advancing automation in research and development.

-

MCP Server

The MCP Server for Azure AI Foundry Labs equips GitHub Copilot with custom tools for model discovery, integration guidance, and rapid prototyping. By streamlining workflows and reducing the idea-to-prototype cycle to under 10 minutes, it accelerates research adoption and boosts developer productivity.

-

EvoDiff

EvoDiff is a diffusion framework for controllable protein generation in sequence space. Trained on evolutionary-scale data, it produces high-fidelity, diverse, and structurally plausible proteins, enabling novel designs beyond structure-based models and advancing sequence-first protein engineering.

-

PEACE

PEACE enhances multimodal large language models with geologic expertise, enabling accurate interpretation of complex maps. By combining structured extraction, domain knowledge, and reasoning, it supports disaster risk assessment, resource exploration, and infrastructure planning—transforming general AI into a specialized geoscience tool.

-

ReMe

ReMe is a web-based framework that helps researchers build AI chatbots for personalized memory and cognitive training. By combining puzzle tasks, life-logging, and multimodal interaction, it enables more engaging, adaptable interventions to advance digital health and non-pharmacological approaches to cognitive care.

-

BitNet

BitNet is the first open-source, native 1-bit large language model, with weights restricted to −1, 0, or 1 (a ternary scheme carrying about 1.58 bits per weight). Scaled to 2 billion parameters, it demonstrates how ternary LLMs can achieve strong performance while dramatically reducing memory, compute, and energy requirements for AI training and inference.
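To make the ternary representation concrete, the sketch below maps floating-point weights to −1, 0, or 1 using an absolute-mean scale, the scheme described in the BitNet b1.58 research. It is an illustration only: the function name `ternarize` and the sample weights are hypothetical, and the released model learns its ternary weights during training rather than by converting a finished full-precision model this way.

```python
def ternarize(weights, eps=1e-8):
    """Map floats to {-1, 0, 1} using an absolute-mean scale."""
    # Scale by the mean absolute value so typical weights land near +/-1.
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    # Round to the nearest integer, then clip into the ternary range.
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


weights = [0.9, -0.05, 0.4, -1.2]  # made-up example values
quantized, scale = ternarize(weights)
print(quantized)  # [1, 0, 1, -1]

# Multiplying back by the scale gives a coarse approximation of the input.
approx = [q * scale for q in quantized]
```

Because each weight takes one of three values, a matrix multiply reduces to additions and subtractions, which is where the compute and energy savings come from.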

-

MatterSim

MatterSim is a deep learning model for accurate, efficient materials simulation and property prediction across a wide range of elements, temperatures, and pressures. It enables in silico materials design—supporting metals, oxides, sulfides, halides, and more across crystalline, amorphous, and liquid states.

-

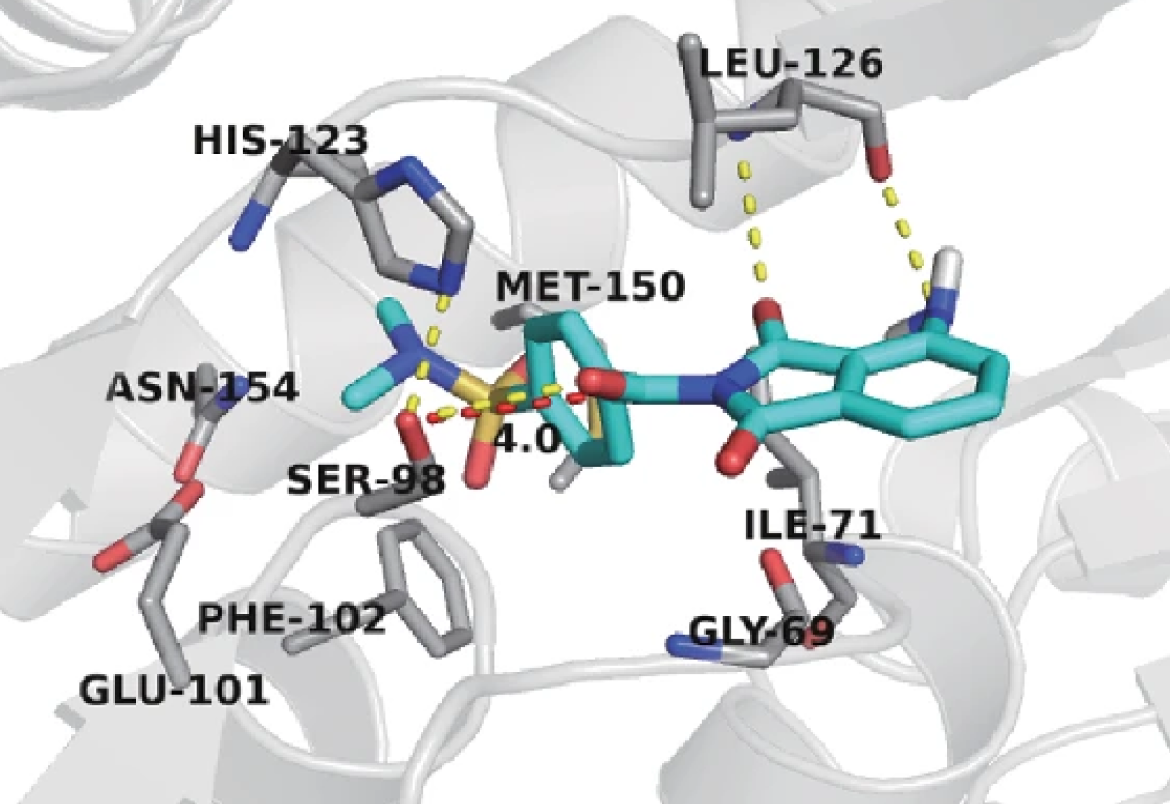

TamGen

TamGen is a transformer-based chemical language model for target-specific drug design. It can generate novel compounds or optimize existing ones by creating target-aware fragments—accelerating discovery, reducing costs, and highlighting the promise of generative AI in advancing new treatments.

-

Aurora

Aurora is a large-scale foundation model for atmospheric forecasting. Trained on over a million hours of weather and climate simulations, it predicts variables like wind, temperature, and air quality with high resolution and speed—helping anticipate and mitigate extreme weather impacts.

-

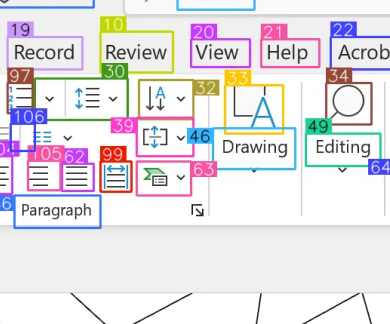

OmniParser V2

OmniParser V2 is a pioneering screen parsing module that turns user interfaces into actionable elements through visual input. By recognizing and mapping UI components, it enables more robust automation, helping agents interact with apps and workflows more effectively across platforms.

-

Magentic-One

Magentic-One is a generalist multi-agent system for complex web and file-based tasks. With an Orchestrator coordinating specialized agents, it automates multi-step workflows across environments—using task and progress ledgers to plan, adapt, and optimize actions for efficient problem-solving.
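The coordination pattern can be sketched as a loop that reads steps from a task ledger, dispatches each to a named agent, and records the outcome in a progress ledger. This is a simplified illustration, not Magentic-One's code: the class fields, `run_orchestrator`, the stall limit, and the toy agents are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class TaskLedger:
    """The goal and the current plan (hypothetical shape)."""
    goal: str
    plan: list


@dataclass
class ProgressLedger:
    """What has been completed and how many steps stalled."""
    completed: list = field(default_factory=list)
    stalls: int = 0


def run_orchestrator(task, agents, max_stalls=2):
    """Dispatch each (agent_name, instruction) step and record progress."""
    progress = ProgressLedger()
    for agent_name, instruction in task.plan:
        agent = agents.get(agent_name)
        result = agent(instruction) if agent else None
        if result is None:
            # No agent handled this step, so count it as a stall.
            progress.stalls += 1
            if progress.stalls >= max_stalls:
                break  # a fuller system would revise the plan here
            continue
        progress.completed.append((agent_name, result))
    return progress


# Toy agents standing in for specialized workers.
agents = {
    "web": lambda text: f"searched: {text}",
    "file": lambda text: f"saved: {text}",
}
task = TaskLedger(
    goal="Collect a report",
    plan=[("web", "find report"), ("coder", "parse it"), ("file", "report.txt")],
)
progress = run_orchestrator(task, agents)
print(progress.completed, progress.stalls)
```

Keeping the plan and the progress record in separate structures lets the orchestrator decide when to keep going and when to stop and re-plan. Here the missing "coder" agent produces one stall and the remaining steps still run.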

-

ExACT

ExACT is an approach for teaching AI agents to explore more effectively, enabling them to intelligently navigate their environments, gather valuable information, evaluate options, and identify optimal decision-making and planning strategies.

-

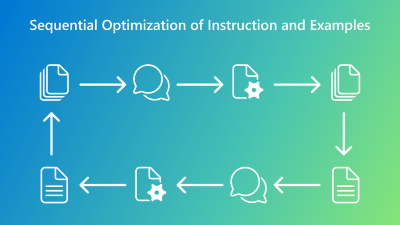

PromptWizard

PromptWizard is a self-evolving framework that automates prompt optimization. By iteratively refining instructions and examples with model feedback, it delivers task-aware, high-quality prompts in minutes, combining expert reasoning, joint optimization, and adaptability across diverse use cases.
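The iterative refinement idea can be sketched as a loop that proposes prompt variants, scores each one, and keeps the best. This is a generic illustration, not the framework's API: `refine_prompt`, `mutate`, `score`, and the hint list are hypothetical, and the toy scoring function stands in for feedback that would come from a model evaluated on task examples.

```python
HINTS = ["Think step by step.", "Show your work.", "Answer with a number."]


def mutate(prompt):
    """Propose variants by appending one unused hint (toy stand-in)."""
    return [f"{prompt} {hint}" for hint in HINTS if hint not in prompt]


def score(prompt):
    """Toy stand-in for model feedback: count the hints present."""
    return sum(hint in prompt for hint in HINTS)


def refine_prompt(prompt, mutate, score, rounds=3):
    """Keep the highest-scoring prompt seen over several rounds."""
    best, best_score = prompt, score(prompt)
    for _ in range(rounds):
        for candidate in mutate(best):
            candidate_score = score(candidate)
            if candidate_score > best_score:
                best, best_score = candidate, candidate_score
    return best, best_score


best, best_score = refine_prompt("Solve the problem.", mutate, score)
print(best_score)  # 3
```

Each round builds on the previous winner, so improvements accumulate. In practice the cost is dominated by the scoring step, since every candidate has to be run against the task.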

-

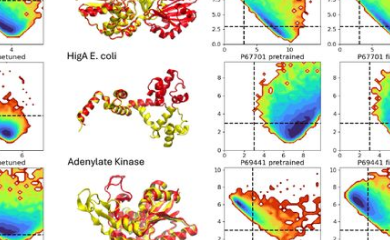

BioEmu-1

BioEmu-1 is a deep learning model that generates thousands of protein structures per hour on a single GPU—vastly more efficient than classical simulations. By modeling structural ensembles, it opens new insights into how proteins function and accelerates drug discovery and biomedical research.

-

Magma

Magma is a multimodal foundation model that perceives text and visuals to generate actions in both digital and physical environments. From navigating UIs to manipulating real-world tools, it advances the vision of general-purpose AI assistants that integrate seamlessly across contexts.

-

Microsoft Phi-4

Phi-4 expands Microsoft’s small language model family with two new releases: Phi-4-multimodal, integrating speech, vision, and text for richer interactions, and Phi-4-mini, a compact model excelling at reasoning, coding, and long-context tasks. Together, they enable efficient, versatile AI across apps and devices.

-

Muse

Muse is a World and Human Action Model (WHAM) developed by Microsoft Research with Ninja Theory. Trained on the game Bleeding Edge, it can generate visuals, controller actions, or both—showcasing how generative AI could accelerate creativity and interactive design in gaming.